Healthcare AI News 12/6/23

News

Google announces Gemini, an “everything machine” competitor to ChatGPT that offers little new functionality to wow users, but puts AI’s key features into a single package. The company will license Gemini to Google Cloud developers and will integrate it starting this week in Google’s consumer-facing apps such as the Bard chatbot, Gmail, and YouTube.

Business

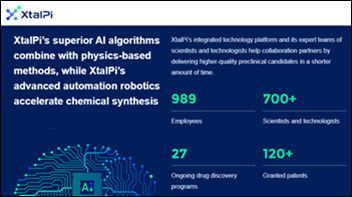

China-based AI drug discovery company XtalPi, which has raised $732 million in funding at a valuation of $2 billion, files for an IPO on the Hong Kong exchange. The three founders are MIT quantum physicists.

The Wall Street Journal reports that drugmaker Johnson & Johnson has hired 6,000 data scientists and spent hundreds of millions of dollars on AI drug discovery technology.

Research

A study finds that ChatGPT incorrectly or incompletely answered 75% of the drug-related questions that were posed to a pharmacy school’s drug information service. It also generated fake citations when asked to list its references. The authors warn that users should check its results against trusted sources.

Other

An op-ed article in Nature says that healthcare institutions should proceed cautiously in rolling out off-the-shelf proprietary large language models from “opaque corporate interests” to avoid undermining the care, privacy, and safety of patients by using tools that are hard to evaluate or could be changed or taken offline. The authors urge a more transparent and inclusive approach in which health systems researchers, clinicians, patients, and tech companies collaborate to build open source LLMs.

The American Veterinary Medical Association describes how one member is using AI to provide a second opinion on X-ray interpretation and to record visit conversations and turn them into SOAP notes.

Microsoft Research VP Peter Lee, PhD, provides thoughts about AI in healthcare:

- It won’t be long before doctors will refuse to practice medicine without the assistance of AI.

- Bing and ChatGPT are good at deciphering lab test results and explanation of benefits, where they can be asked “are any of these results concerning” or “do I owe money.”

- He and his sisters asked GPT-4 to review their father’s medical records, then list the three best things to ask the specialist in their 15-minute visit.

- He says that GPT-4 is “almost superhuman” in its ability to serve as a second set of eyes for a physician in reviewing patient information and the doctor’s diagnosis by being asked, “Did I miss anything, or should I consider something else?”

- Lee warns doctors not to think of ChatGPT as a computer that has perfect recall and performs perfect calculations, but rather as a “personal intern” whose work requires review.

- AI doesn’t follow the regulatory framework of a software medical device, so it’s up to the medical community to take control of how and when it is used.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

I like how Microsoft Research VP Peter Lee characterizes the current generation of AI products: as an intern whose work requires review.

Mr. H has recently posted worthy thoughts on the appropriate role for these AI products too.

My concern? People will use the products and they will actually be pretty good! But the users may not notice that the role was small and their expectations were low. Next, I foresee the following:

1) This step is optional, but it involves role expansion, with a concomitant rise in expectations. Once again, the AI performs at or above expectations.

2) Now the user is lulled into thinking the AI system is fantastic, infallible (or nearly so), an essential part of any business role, and a vital productivity enhancement. No function is out of reach! Trust in the AI is stratospheric, leading to its use in roles where it cannot perform well. Yet there is little or no human oversight.

The result is failure, disappointment, and finger-pointing at the AI. Yet the AI never did any of this.

Asking ChatGPT for citations is tricky, but the Plus version will provide web links when asked, and none of the links provided so far have been fake.